When sizing fonts with CSS, there’s a rule of thumb that states:

1em = 12pt = 16px = 100%

There are even handy tools that help you calculate sizes based on this rule.

But this rule of thumb leaves out a very important piece of information. And without that information, you could be left wondering why the size of your type doesn’t look right.

Testing Points and Ems

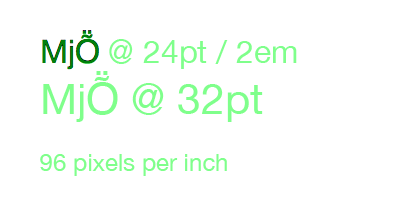

To illustrate, let’s take a look at some work I’ve been doing recently to measure text accurately on the web. I have a simple test page that displays text in a single font at various sizes:

<!DOCTYPE html> <html lang="en"> <head> <meta charset="utf-8" /> <title>Em Test</title> <meta name="generator" content="BBEdit 10.5" /> <style> body { font-family: "Helvetica Neue", sans-serif; font-size: 100%; line-height: 1; } p { padding: 0; margin: 16px 0 16px 0; } </style> </head> <body> <p style="font-size: 2em; background-color: #eee;">MjṎ @ 24pt / 2em</p> </body> </html> You can view a slightly more complex version of the page here.

The W3C recommends specifying fonts using either ems or pixels. Since high resolution displays are becoming ever more common, that really leaves you with just one choice: the em.

(Note: In the examples that follow, I’m using text set at 2em since it’s easier to see the problem I’m going to describe. The effect, however, will happen with any em setting.)

The body is set in Helvetica Neue at 100% so 1em will be the equivalent of 16px when the user’s browser is using a default zoom setting. The line-height is 1 so there’s no additional padding around the text: the default of “normal” would make the line approximately 20% taller.

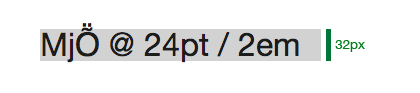

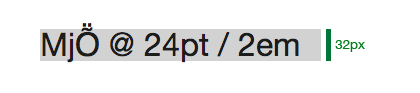

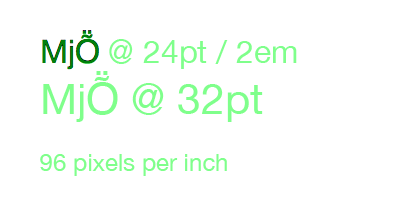

When you display the test page, everything looks good because the sizes are all scaled correctly relative to each other. A quick check of the light gray background with xScope shows that the height of the paragraph element is 32 pixels (2 ems):

But then things got confusing.

Points aren’t Points

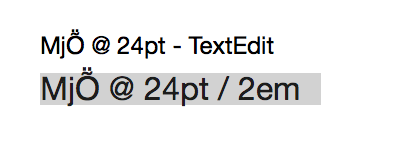

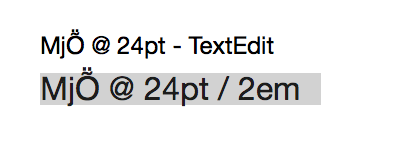

I had just been measuring some text in TextEdit and noticed something was amiss. Comparing the two “24 point” fonts looked like this:

I’m no typography expert, but there’s something clearly wrong here: a 24pt font in TextEdit was noticeably smaller than the same size in my web browser.

I confirmed this behavior in three browsers running on my Mac: Safari/Chrome (rendering with WebKit) and Firefox (rendering with Gecko) displayed the larger version of 24 point text. Why were the sizes different?

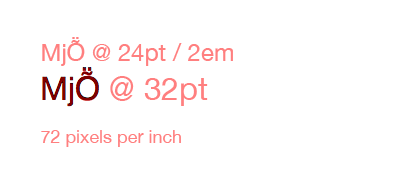

After some experimentation, it appeared that rendered glyphs were from a 32pt font:

What the hell?

A Brief History of Type

When confronted with a problem like this, it’s always a good idea to question your assumptions. Was I measuring the right thing? So I started learning more about type…

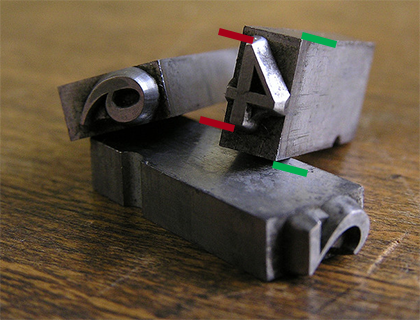

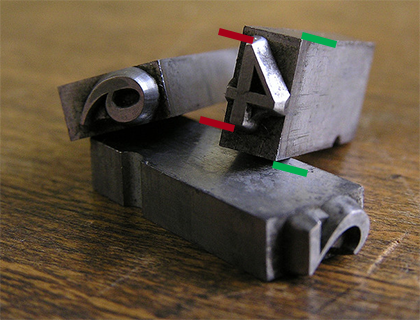

In general, points are meaningless. It’s a relic of the days of metal type where the size specified the height of the metal, not the mark it made on the page. Many people mistake the size of a glyph (shown below with red bars) with the size of a point (green bars):

(Photo by Daniel Ullrich. Modifications by yours truly.)

Even things like the word “Em” got lost in translation when moving to the web: it originally referred to the width of a typeface at a given point size. This worked in the early days of printing because the metal castings for the letter “M” were square. Thankfully, we’re no longer working in the 16th century.

In modern fonts, like Helvetica Neue, a glyph for a capital “M” may be square, but the flexibility of digital type means that the width and height of a point rarely match.

Thanks Microsoft!

After doing this research, I was sure I was measuring the right thing. The sizes of the glyphs should match, regardless of point size. Something else was clearly in play here.

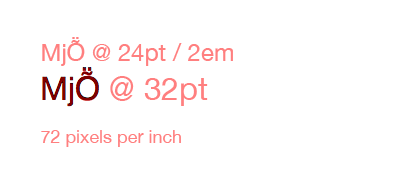

Let’s just say I spent a lot of quality time with Google before eventually stumbling across a hint on Microsoft’s developer site. The document talks about using a default setting of 96 DPI. I’ve been spending a lot of time lately with the Mac’s text system, so I knew that TextEdit was using 72 DPI to render text.

I quickly opened a new Photoshop document and set the resolution to 96 pixels per inch (DPI). And guess what?

All the text got larger and it matched what I saw in my browser. Mystery solved!

72 DPI on the Mac is 75% smaller than 96 DPI used by the Web. So the 24pt font I was seeing in TextEdit was 75% smaller than the 32pt font used in the browser.

Further research about how 96 DPI is used on the web turned up this Surfin’ Safari post on CSS units written by Dave Hyatt back in 2006:

This is why browsers use the 96 dpi rule when deciding how all of the absolute units relate to the CSS pixel. When evaluating the size of absolute units like pt browsers simply assume that the device is running at 96 CSS pixels per inch. This means that a pt ends up being 1.33 CSS pixels, since 96/72 = 1.33. You can’t actually use the physical DPI of the device because it could make the Web site look horribly wrong.

That’s another way to think about this problem: a single point of text on your Mac will be 1.33 times larger in your browser.

Now What?

Now that we know what caused the problem, how can we avoid it?

The key piece of information that’s missing in the “1em = 12pt = 16px = 100%” rule is resolution. If you’re specifying point sizes without also specifying resolution, you can end up with widely varying results. For example, each of these is “24 points tall”:

If you’re lucky enough to have a Retina Display on your Mac, you’ll be rendering text at 144 DPI (2 × 72 DPI). That’s 20% larger than the last example above (Windows’ 120 DPI setting.) Display resolution is increasing and so are the number of pixels needed to represent “a point”.

Note that you can’t “fix” this problem by specifying sizes in pixels. If you specify a font size of 16px, your browser will still treat that as 12pt and display it accordingly.

As a designer or developer, you’ll want to make sure that any layout you’re doing for the web takes these size differences into account. Some apps, like Photoshop, allow you to specify the resolution of a document (that’s how I performed my sizing tests.) Make sure it’s set to 96 DPI. Resolution may not matter for exporting images from Photoshop, but it will make a difference in the size of the text in your design comps.

Most other Mac apps will rely on Cocoa’s text system to render text, so if there’s no setting to change the document’s resolution, a default of 72 DPI should be assumed.

An alternative that would suck is to start doing your design and development work on Windows where the default is 96 DPI. That setting also explains why web browsers use this resolution: remember when Internet Explorer had huge market share?

And finally, expect some exciting new features for measuring, inspecting and testing text in your favorite tool for design and development. I’m doing all this precise measurement for a reason :-)

Updated February 25, 2014: It turns out Todd Fahrner first identified this problem back in the late 1990′s. His work with the W3C was instrumental in standardizing on 96 DPI. What’s depressing is that I knew about this work: Jeffrey Zeldman and I even developed the Photoshop filters mentioned on his site. Maybe I should send in my AARP membership form now.